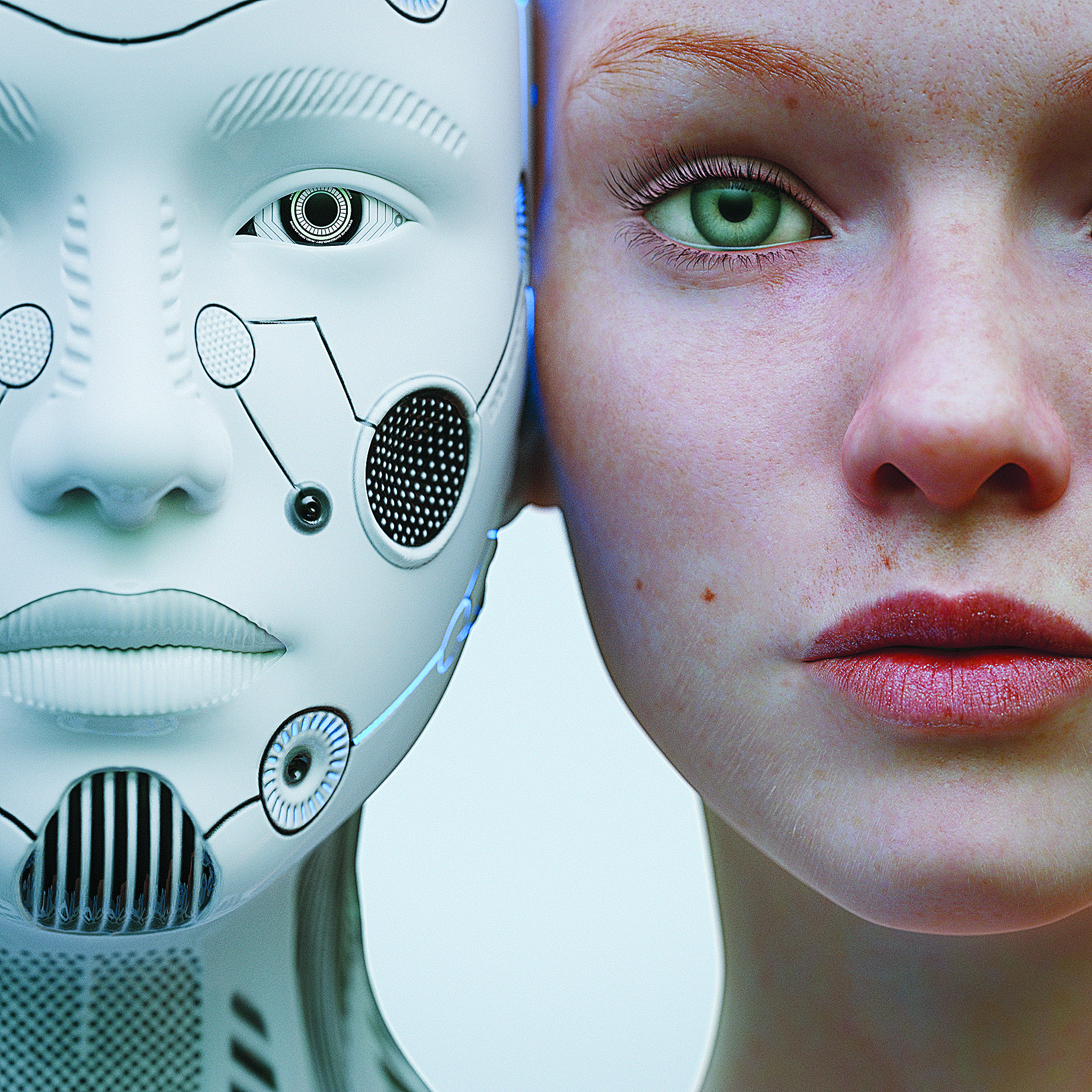

Will AI systems have rights or will they be our digital slaves?

American economist and intellectual presents his predictions to Kathimerini about intelligent machines

In a post-capitalist society, the integration of artificial intelligence into the economic system will dramatically reduce the role of the human factor. New systems for generating artificial thought and discourse such as ChatGPT, which compete with human cognition, are already creating a number of puzzles across the social sciences. What if these intelligent machines come to be smarter than their creators? How would our legal and economic system deal with them? Will they have rights or will they be our digital slaves?

Kathimerini sought answers from a renowned intellectual and anarcho-capitalist theorist, 78-year-old David Friedman. He is the son of Nobel laureate Milton Friedman (1912-2006), one of the leaders of the Chicago school of economics, a neoclassical school of economic thought, and the mastermind behind Reagan’s and Thatcher’s economic policies in the early 1980s. David Friedman argues that societies can live harmoniously without centralized government. In our discussion, he attempts to foresee the challenges that societies will face with the rise of artificial intelligence.

Professor Friedman, could you briefly describe to us your views on the anarcho-capitalist system and the theory of “stateless” societies?

I sketched in my first book, “The Machinery of Freedom,” the idea that all of the useful functions of government should be provided privately. And to my mind, the core function is making and enforcing law: that is to say, enforcing individual rights, settling disputes and so forth. In the system I sketched, it was done in a decentralized manner in which you had firms that sold the service of protecting the rights of their customers and settling their disputes. Each individual would choose to be a customer of one such firm. Each pair of firms, recognizing the potential for conflict, would agree in advance on an arbitrator.

On the other hand, neither firm wants to start shooting at the other, because warfare is very expensive, so it makes sense for both of them to pre-commit to accepting a private court, an arbitration agency, as it were, and following its verdicts. We actually have a sort of real-world model of that in the modern world, in the case of auto insurance companies. If my car runs into your car, my insurance company would like to believe that you are at fault. Your insurance company would like to believe that I am at fault. And they really have two alternative ways of settling the dispute. One of them is to sue each other, to go to court. But that is a very expensive procedure. And the other is to have rules of thumb they have both agreed on by which they will decide which one is liable and which one is not. In modern-day America, those cases almost never go to court.

How could the fundamental principles of your ideas, like polycentric law, function in societies with increased legal and defense needs?

Regarding the legal needs, I do not see the problem. I have described how the legal system is handled on the market because rights enforcement is basically a private good. I am the one who benefits by having my rights protected, and I will be willing to pay a private firm to do that. But defense is not. And that is going to be a problem. A while ago somebody pointed me towards a news story about one of the Baltic countries, I think it may have been Estonia, where a popular sport is training for guerrilla warfare. That is to say, they apparently have a fairly highly organized system and it is subsidized by the government, but it is mostly being done privately.

So, through charitable donations, a small core of professionals could be supported, who would coordinate and organize the warfare. But it is a difficult problem and I would be less optimistic about the implementation of my system in Estonia than I would be for the US, because the US has neighbors who are very much poorer and militarily weaker than it is, and therefore we do not have to raise a very large fraction of our power to defend ourselves. Besides which, the Canadians have shown no evidence of wanting to invade America, nor have the Mexicans. So, I think that my system would work better for a country which did not have powerful, aggressive neighbors.

Based on your book “Future Imperfect,” how is technology creating an invasive and imperfect world?

I actually discussed one of the problems in the book. The argument I was making there is that it may well be the case that we will create computer programs that are the equivalent of humans. They are not going to be very much like humans, because they are different kinds of things, but they will be as smart as we are. And that is particularly scary. The problem is that they are getting smarter and we are not. And therefore, if in 30 years we have human-level AI, in 40 or 50 years we are going to have AI much smarter than we are, and we had better hope they like pets. There are other worries as well. Various people I know worry about the possibility of a single AI reaching superhuman intelligence. The standard version of this, which I think Eliezer Yudkowsky came up with, is “the paperclip maximizer”: somehow somebody makes an AI which is supposed to maximize production of paperclips, and it makes a smarter AI for doing that, and that one makes a still smarter one, and eventually you have an AI so smart that it can control the world by tricking people into doing what it wants. And it turns out that human beings are not really the best way of making paperclips. So, it eliminates the human beings.

In humans the existence of consciousness is the first fact we are aware of. Everything else filters through that. And it may be that we cannot just do it by programming a computer. The things that have been impressing people so far, ChatGPT and its progeny, do not really seem like people at all. They seem like clever ways of using the work of people, namely the whole body of text, in order to pretend to be a person. But that is a very different thing than actually being a person.

What will be the future of the capitalist system in a robotic world, transformation or disorder?

If you mean a literally robotic world, if you mean a world where we have been replaced by AIs, my guess is that they will have the same coordination problem we do. Therefore, in an imaginary fictional future world where there are no humans, only robots, those robots will probably trade with each other and contract with each other, maybe even set up robot corporations. Because the fundamental logical problem of a whole bunch of individual actors, each of whom has his own objectives, trying to coordinate exists for them as it exists for us. Therefore, I do not see any interesting issues that are raised by AI as long as it is just machines. The interesting issues are raised when the AIs become people, if they ever do. And then you have to say, how do you integrate them into your legal system? Are they slaves? Excel is a slave. The same applies to Word, which is sometimes a disobedient slave when it does what I do not want it to do. But as long as they are not people, maybe they will be slaves. After all, humans have had slaves before and that is not a very attractive system. But if it is really running on your computer and you can turn it off anytime you want, maybe that person inside your computer had better do what you tell it to. The more interesting outcome is establishing a world where they get treated as people too, and where you then have a legal system that somehow takes account of the differences between a computer program and a flesh-and-blood person.

Do you foresee two capitalist systems, one which involves the human factor and one which is going to involve the robotic factor, or one robotic capitalist system which will incorporate both?

As I say, you want to think about whether the robots are people, or rather whether the AIs are people, because a robot really suggests a machine with a body, and AIs may or may not have bodies. If the AIs are not people, then you have got one economic system of human beings using machines. If the people have their own system, you would think that you would then have an economic system in which people buy things from AIs and AIs buy things from people. And, you know, humans employ computers and computers employ humans and, after all, we have men and women in our present system and we manage to incorporate them in a single economy. Then, will the computer really be that much more different from me than my wife is? Probably not.