‘People will get used to judgments being done by machines’

Nobel laureate voices concern to Kathimerini about the age of AI autonomy and the impact it may have on the role of humans

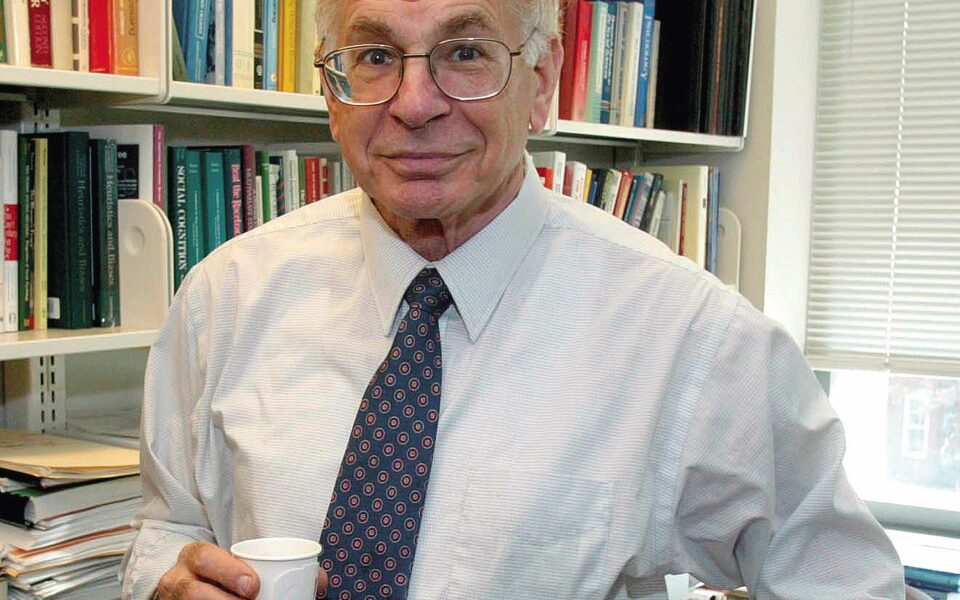

How would you feel if two judges imposed different sentences for the same offense? Or if two radiologists reached different diagnoses from the same X-ray? Or, even worse, if the same judge or the same doctor decided similar cases differently depending on their mood or fatigue? Such variations in human judgment, in areas that matter a great deal, are what Nobel Prize-winning psychologist and economist Daniel Kahneman and his colleagues call “noise.” In “Noise: A Flaw in Human Judgment,” the Princeton University professor emeritus of psychology and public affairs, a founder of modern behavioral economics and author of “Thinking, Fast and Slow,” the bible of business management consultants, analyzes this noise and the factors that make human judgment fickle, and suggests ways to reduce errors we have come to regard as systemic.

Speaking to Kathimerini, Kahneman responds to the dilemmas arising from the rapid development of artificial intelligence tools such as ChatGPT, and makes no secret of his concern about the age of AI autonomy and the impact it may have on the role of humans.

How do you define “noise” and how does it affect the decision-making process?

We speak of noise when there are multiple decisions. There can be many decisions about different things or many decisions about the same thing. Actually, it is easier to think of them as judgments or measurements: you either measure different things, or you measure the same thing many times. Then there is the concept of error. The average of these errors is what we call bias, so bias is a predictable, average error. But the errors are not all the same. Some are large, others small; some are positive, others negative. The technical definition of what I am describing is the “variability of errors.” The term is unfortunate, because the word “noise” has many different meanings and it is very easy to forget its technical meaning. But the technical meaning is simply “variability of errors”: you do not want undesirable variability in judgments. Strictly speaking, it is “undesirable variability in judgment errors.”
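To make the distinction between bias and noise concrete, here is a minimal sketch with made-up numbers: five hypothetical judgments of a quantity whose true value is assumed to be known (100). It treats bias as the average error and noise as the variability of the errors, measured as their population standard deviation, and checks the decomposition that Kahneman and his co-authors call the “error equation” in “Noise”: overall error (mean squared error) equals bias squared plus noise squared.

```python
from statistics import fmean, pstdev

# Hypothetical data: five judgments of a quantity whose true value
# is assumed to be known. The numbers are invented for illustration.
TRUE_VALUE = 100
judgments = [104, 98, 110, 102, 106]

# Error of each judgment relative to the true value.
errors = [j - TRUE_VALUE for j in judgments]

# Bias: the average error, a predictable systematic offset.
bias = fmean(errors)

# Noise: the variability of the errors, here their population
# standard deviation (dividing by n, not n - 1).
noise = pstdev(errors)

# Overall error, measured as the mean squared error.
mse = fmean([e * e for e in errors])

print(f"errors = {errors}")
print(f"bias   = {bias}")
print(f"noise  = {noise}")
print(f"MSE    = {mse}, bias^2 + noise^2 = {bias**2 + noise**2}")
```

With these invented numbers the errors are 4, -2, 10, 2 and 6, so bias and noise both come out to 4 and the mean squared error is 32 = 4² + 4². The identity holds exactly only with the population standard deviation; using the sample standard deviation would break it.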

Do you think we could avoid noise if we delegated decision-making to machines?

In the past, you could say that mathematical algorithms had no noise, because whenever you asked them the same question, you got the same answer. This is not true of modern artificial intelligence algorithms. If you ask ChatGPT the same question twice, you will not get exactly the same answer. So there is some noise. But there is certainly much less noise in algorithmic judgments: even a simple combination of variables produces much less noise than human judgment.

Would people entrust the administration of justice to an inanimate entity, such as a machine?

I think it would be difficult at first. But this is something people could get used to. For example, I have heard that in China they are experimenting with bankruptcy judgments being made by artificial intelligence. And you can see immediately that in that narrow domain, this is clearly a better way to go than using judges. So people will get used to judgments being made by machines, but it is going to be a gradual process. I would not be surprised if the use of artificial intelligence expands. At the moment, people want a doctor, and they want the doctor to tell them what is going on. What will happen is that when the algorithm says one thing and the doctor says another, people will be torn. I mean, they can easily imagine the algorithm being right and the doctor being wrong. And I believe that once algorithms are right much more often than doctors, people will eventually trust the algorithms. All this will take time. But I do not believe it is impossible for people to trust algorithms.

If we ourselves are affected by noise, how could we develop a fair algorithm that combines justice and equity, and build these principles into a machine?

We are a long way from that. But in some cases, such as bankruptcy, the approach is fairly straightforward: there are rules, the law is quite specific, and you can write a program that executes those rules. So in cases like bankruptcy there is a solution, and you can program an algorithm that will be just and equitable. However, when the issue is determining who is telling the truth, we are a long way from having algorithms do it, though we know that people do not do it very well either. So there is certainly room for improvement in the way judges operate.

Are you concerned about the rapid development of artificial intelligence, such as ChatGPT, without limits set by humans?

Of course I am concerned. I think everybody who follows the situation is concerned. In the short term, it can be used in ways that will make journalism almost impossible, because it will be so difficult to distinguish the truth from fakes. That is only one problem, and there are dozens of problems. In the long run, living with a superior intelligence is a big threat. So I think many people are concerned, and so am I.

If you had the power to impose a rule on AI algorithms, what would it be?

Well, there is a computer scientist at Berkeley, Stuart Russell, who is working on what is called “alignment,” meaning ensuring that AI will have objectives that conform to human objectives. And that turns out to be a difficult technical problem. It is not simple to make sure that an algorithm will actually be beneficial, even if you give it an objective, a goal. What many people are concerned about is that, on the way to its goal, an AI might set itself sub-goals that actually involve harming people. If artificial intelligence is highly advanced, it will be highly autonomous. So those are some of the terrible scenarios that people are worried about.

Is it reasonable to expect artificial intelligence to improve human cognition?

Artificial intelligence is going to be very helpful. It is already very helpful to many people; that is clear. But the problem is that in many professions, humans may need artificial intelligence, but artificial intelligence may not need humans. That is the problem. And you know, that is already the case in chess, where the software is much better than the best human players. In any domain where artificial intelligence makes the same kinds of judgments as people on the basis of the same information, artificial intelligence will always be vastly superior to people.

What advice would you give the younger generation, sometimes called the Instagram generation, which is constantly affected by noise?

I have no advice for the younger generation. You know, giving advice would take knowledge about the future. And one thing I am quite sure of is that I have no idea what is going to happen in the future, so I do not have any advice. And that is actually a good final answer.